PREVIEW OF THE BOOK:

In the early 1990s, our long-accepted (circa 1926) understanding of how a nerve encodes and conveys information was unexpectedly overturned by experiments on fast-flying bats and insects. Around 1995, we began to realize we no longer knew what neurons actually do.

In the 31 years since, many competing and overlaid hypotheses have been advanced, suggesting various alternate neural encoding schemes. But the question of how a nerve communicates remains unanswered. It is a huge, gaping hole, at the most basic level, in our understanding of how the nervous system works.

It is possible we are thinking about the nerve impulse in the wrong way. We have known since the 1850s that a nerve impulse’s voltage, or height, is fixed and locked. This makes the impulse appear to be an all-or-none, yes-or-no, zero-or-one type of spike. This is ideal for a digital computer but the brain feeds on graded analog signals like the brightness of a light, the loudness of a sound, the hotness of a pot on the stove. How could a digital, all-or-none, zero-or-one spike convey graded analog information?

Because a nerve impulse cannot vary in amplitude, it is almost universally (though incorrectly) assumed in neuroscience that a single spike cannot be made to convey analog information. However, it was remarked in the 19th century that two successive nerve impulses, traveling in tandem up a nerve fiber, nicely mark the beginning and the end of an interval. This interval between successive impulses can indeed be easily varied: lengthened or shortened like a rubber band in proportion to a varying analog input signal such as the intensity of incoming light.

Accordingly, in textbook neuroscience, each single nerve impulse is regarded as meaningless, but two or more impulses — typically trains of impulses — can convey analog information to the brain. The idea works best if intervals between spikes are shortened as an analog input gets bigger, and stretched out if the analog input is mild. In this traditional view of the nervous system a strong stimulus (hot stove) would be encoded as a rapid-fire high frequency pulse train, and a gentle stimulus would be encoded as a low frequency pulse train.
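The textbook scheme just described can be sketched in a few lines of Python. This is only an illustration, not a model of any real neuron: the function names, the 0-to-1 intensity scale, and the interval limits of 2 ms and 100 ms are all assumptions chosen for the example.

```python
# A toy rate code: stronger stimulus -> shorter interval between spikes.
# All names and numeric limits here are illustrative assumptions.

MIN_INTERVAL_MS = 2.0    # assumed shortest interval (strongest stimulus)
MAX_INTERVAL_MS = 100.0  # assumed longest interval (weakest stimulus)


def interspike_interval(intensity):
    """Map a stimulus intensity in [0, 1] to an interval between spikes (ms)."""
    intensity = min(max(intensity, 0.0), 1.0)  # clamp to the assumed range
    return MAX_INTERVAL_MS - intensity * (MAX_INTERVAL_MS - MIN_INTERVAL_MS)


def spike_times(intensity, n_spikes=5):
    """Return the firing times (ms) of a regular train of identical spikes."""
    interval = interspike_interval(intensity)
    return [i * interval for i in range(n_spikes)]


def decode_intensity(times):
    """Recover the intensity from the gap between the first two spikes."""
    if len(times) < 2:
        raise ValueError("a single spike carries no interval to measure")
    interval = times[1] - times[0]
    return (MAX_INTERVAL_MS - interval) / (MAX_INTERVAL_MS - MIN_INTERVAL_MS)


hot_stove = spike_times(1.0)     # rapid-fire train
gentle_touch = spike_times(0.1)  # slow train
```

Note that `decode_intensity` needs at least two spikes. In this traditional picture the receiver has to wait for the second impulse before it learns anything.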

There are many other encoding schemes for pairs and trains of impulses and a substantial literature on neural codes. Some are quite sophisticated.

They could all be wrong. This is because their going-in assumption — that a single nerve impulse of fixed amplitude cannot convey analog information — is not true.

Suppose for a moment that an all-or-none nerve impulse has access to multiple transmission channels — to a cable rather than a wire. Given multiple channels, a single all-or-none impulse could easily assume one of many different numerical values, not just a digital 1. If there were 100 channels, for example, then the impulse could communicate any value in a range from 1 to 100, depending on which channel the impulse travels. In a neuron with multiple channels, a single, seemingly blank, all-or-none nerve impulse can convey finely graded analog information in real time.
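The 100-channel example can be sketched the same way. Again this is a toy under stated assumptions: the function names and the 0-to-1 input scale are invented for illustration, and nothing here describes how a membrane would actually select a channel.

```python
# A toy channel code: the analog value picks WHICH channel fires.
# The spike itself is identical on every channel (all-or-none).
# Names and the [0, 1] input scale are illustrative assumptions.

N_CHANNELS = 100  # the channel count used in the example above


def channel_for(value, n_channels=N_CHANNELS):
    """Quantize an analog value in [0, 1] to a channel number 1..n_channels."""
    value = min(max(value, 0.0), 1.0)  # clamp to the assumed range
    return 1 + min(int(value * n_channels), n_channels - 1)


def decode_channel(channel, n_channels=N_CHANNELS):
    """Recover the analog value (midpoint of the channel's bin) from one spike."""
    return (channel - 0.5) / n_channels


dim_light = channel_for(0.0)     # fires on channel 1
bright_light = channel_for(1.0)  # fires on channel 100
```

Here a single impulse is enough: the receiver reads the channel number on arrival and does not wait for a second spike. The price is resolution, which is limited to one part in `N_CHANNELS`.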

This book is about what would happen if, as a thought experiment, we were to rewire the human nervous system using a multichannel neuron. It explores the impact of this hypothetical “smarter” neuron on vision, memory and the brain.

The ancient anatomist who first isolated a big nerve probably thought it was an integral structure – one wire. Closer scrutiny revealed the nerve comprises a bundle of individual neurons. We are proposing yet another zoom-down in perspective, this time to the molecular level: In this model, each axon within a nerve bundle is itself composed of about 300 longitudinal channels created by linking adjacent sodium channels.

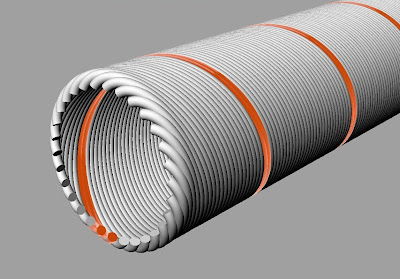

Multichannel neuron membrane. A single channel marked in orange is firing. The device is an analog-digital hybrid.

A nerve impulse traveling along a multichannel axon is digital on its face. It is an all-or-none spike. To a voltmeter, each nerve impulse looks like every other nerve impulse. Its analog information content is unsuspected and undetected. But to the brain, each arriving nerve impulse communicates an analog gradation denoted by a specific channel number — an integer such as 2, 3, 4, 17, or 131.

The theoretical payoff is enormous. Suddenly the brain, which operates on impulses moving at velocities barely better than highway speeds, becomes in theory a dazzlingly fast and competent thinking machine. Which is, of course, exactly what the brain is in real life.

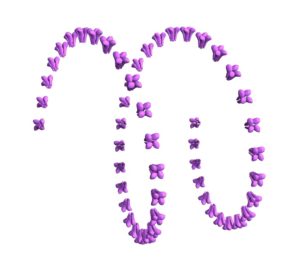

A single longitudinal Na channel modeled by linking adjacent unit sodium channels. This channel is wound as a helix around the central axis of an axon. Each unit sodium channel is represented by its four highly homologous transmembrane domains. It is linked to its neighbor by a hypothetical protein moiety which is not shown.

The new neuron model requires that we imagine adding a fillip, a protein moiety, to the increasingly well-understood biochemistry of the sodium channels. Small and subtle changes at the level of the neuron have billionfold consequences, and so one quickly arrives at a very different neuroscience.

Sixteen chapters have been completed and posted. The seventeenth chapter is currently in progress.

1. One Spike is Enough. In 1995, our long-accepted understanding of how nerves encode information was unexpectedly overturned by experiments on fast-flying bats and insects. Today, 31 years later, no alternative neural code has emerged as definitive. What if no code exists?

2. The Corduroy Neuron. A more sophisticated neuron is one possible solution. How it works. What it requires at the membrane level. The model is codeless. No encoding, no clocking, no processing, no decoding. A much faster way to move information. The model is able to explain why a nerve’s firing frequency will sometimes vary with stimulus intensity, as Adrian discovered in 1926. And why, under most circumstances, a single spike will suffice.

3. Impulses that travel without action potentials. The model suggests a “silent” impulse may exist. In this hypothesis, undetected impulses conveyed by lines of voltage sensors — wires, essentially — are the basis of communication in dendrites, in photoreceptors, in internodes, and in the miniaturized nervous system of the famous nematode, C. elegans.

4. Eye-Memory. Why the retina was once regarded as a prototypical memory organ by computer designers, notably John von Neumann.

5. The retina conserves spatial phase. What is spatial phase? What would you see if the eye conserved it? Everyday reality.

6. Gems in a junkyard. Double diffraction imaging and the classical holographic memory theories of 1963-73.

7. What does a memory look like? The retina reconsidered as a bipartite system, able to capture both an image plane and its corresponding Fourier plane simultaneously. What we see and what we store.

8. Standing waves in photoreceptors. An obscure but fascinating literature suggests both rods and cones could detect color, intensity and spatial phase. And yes, they did say rods could detect color.

9. Rods and cones as wave detectors. To detect standing waves the photoreceptor cell, which is conventionally understood as a particle detector, must operate as a wave detector. It absolutely requires a mirror. How does the ribbon synapse function in this system? Sodium pump inversion. Nerve impulses that can travel without action potentials.

10. Eye evolution. Eyes before lenses. Why the retina looks backwards. Imagine two optical systems, a mirror and a lens, crammed into one eyeball. The mirror is now just a vestige of the original, but perhaps it set the course of vertebrate eye evolution.

11. Reverse transcriptase writes memories. Memory as a massive infection of the cerebral hemispheres. The 30 fossil retroviruses in the human genome, and the recording machine they brought to us.

12. The retina of memory. The Victorians saw the human visual memory as a photograph. They thought the brain was clicking away constantly through the aperture of the eye. This charming idea was obliterated in the mid-20th century by Hubel and Wiesel and the higher concept of “feature detector” neurons, but this idea, in turn, took a torpedo in the 1990s. Maybe the Victorians were right.

13. The mind as an eye. The brain modeled as a functional replica of the eye. In this hypothetical, lightless eye of the mind, what structure corresponds to retinal photoreceptors? What does arborization accomplish?

14. How does visual memory work? In the familiar neural network model of memory, the memory is distributed and there are no addresses. In contrast, in a biological memory store modeled as “a thing in a place” that place has a distinct address. It is numerical.

15. The brain of the Denisovan girl. From a tiny finger bone discovered by archaeologists in the Denisova Cave, it was possible to sequence the genome of a 5-year-old girl who lived there 80,000 years ago. A comparison of her genes with ours triggers some speculations about visual memory, verbal memory, talking apes, photographic memory, and the mechanics of synesthesia.

16. The two brains. The emergence of verbal language from an archaic communication system based on synesthesia. The notion is plausible because both the eye and the ear perform Fourier transformations on incoming sensory information.

17. (in progress) Problems and observations. How and why memory could end up in DNA. What the sequence might look like.